Suno is an AI service that generates music with vocals from keywords and genre tags. It is well known enough to have been featured on TV and radio programs, and I suspect many readers have tried it at least once. A model that can compete with it locally, “HeartMuLa,” has been released, so here is a trial report.

What is HeartMuLa?

Released on January 14, 2026, “HeartMuLa” is a music generation AI developed by a research team centered on Peking University. It consists of the following four models.

- HeartCLAP: Audio and text alignment model

- HeartCodec: Music codec tokenizer

- HeartMuLa: Music generation model. Supports music generation up to 6 minutes long

  - Supports English, Chinese, Japanese, Korean, and Spanish

  - Synthesizes music under controlled conditions, including style descriptions, lyrics, and reference audio

- HeartTranscriptor: Lyrics recognition model

The music generation introduced here actually uses HeartCLAP + HeartCodec + HeartMuLa. HeartTranscriptor is for transcribing lyrics (text) from music.

HeartCLAP + HeartCodec + HeartMuLa takes two kinds of input: “lyrics” and “style tags.” The latter let you specify music genres such as rock and J-Pop, as well as instruments such as piano. The full list of style tags is too long to reproduce, so it is summarized here; those who are interested should refer to it.

In other words, you can specify lyrics and style tags and it will generate music with vocals…a local version of Suno.

What’s more noteworthy is that it supports Japanese! If you insert Japanese lyrics, both female and male vocals will sing in fluent Japanese.

The models are here. There are currently two 3B variants registered.

3B-20260123

HeartMuLa/HeartMuLa-RL-oss-3B-20260123

HeartMuLa/HeartCodec-oss-20260123

3B

HeartMuLa/HeartMuLa-oss-3B

HeartMuLa/HeartCodec-oss

Common

HeartMuLa/HeartMuLaGen

HeartMuLa/HeartTranscriptor-oss

I tried both 3B and 3B-20260123, and the latter seems to follow style tags a little better! According to the repository, the improvements are, in summary:

- More accurate adherence to the style specified by tags

- Music genre, mood, specified instruments, etc. are reflected more clearly

This matches my impression from trying both. From that standpoint, I recommend 3B-20260123.

There is also a 7B model, which had not been released at the time of writing; by all accounts it seems to be attracting a lot of attention.

Until now, I have posted several locally generated music videos (MVs), but the all-important music was always made with Suno.

With the advent of HeartMuLa, this can now be done truly 100% locally! That is today's topic. I'll go through it step by step. It is nice to be able to make a music video entirely locally without worrying about point consumption or usage restrictions, although it does eat up time… (lol)

Challenge with ComfyUI

First of all, a HeartMuLa custom node for ComfyUI is available from the official website, so let's try it.

$ cd ComfyUI/custom_nodes/

$ git clone https://github.com/benjiyaya/HeartMuLa_ComfyUI

$ cd HeartMuLa_ComfyUI

$ pip install -r requirements.txt

Download the model

$ cd ComfyUI/models/

$ hf download HeartMuLa/HeartMuLaGen --local-dir ./HeartMuLa

$ hf download HeartMuLa/HeartMuLa-RL-oss-3B-20260123 --local-dir ./HeartMuLa/HeartMuLa-RL-oss-3B-20260123

$ hf download HeartMuLa/HeartCodec-oss-20260123 --local-dir ./HeartMuLa/HeartCodec-oss-20260123

$ hf download HeartMuLa/HeartTranscriptor-oss --local-dir ./HeartMuLa/HeartTranscriptor-oss

Download the four repositories above. If you also want to try the older 3B (you can switch models in the node), download the following two as well.

$ hf download HeartMuLa/HeartMuLa-oss-3B --local-dir ./HeartMuLa/HeartMuLa-oss-3B

$ hf download HeartMuLa/HeartCodec-oss --local-dir ./HeartMuLa/HeartCodec-oss
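The download steps above can be collected into a small script. This is only a sketch: the repository IDs and directory layout are copied from the commands above, while the helper names (`hf_download_argv`, `all_commands`) are made up for illustration. It only prints the commands; actually running them is left commented out.

```python
# Repository names taken from the commands in the article.
REQUIRED = [
    "HeartMuLa-RL-oss-3B-20260123",
    "HeartCodec-oss-20260123",
    "HeartTranscriptor-oss",
]
OPTIONAL_OLD = ["HeartMuLa-oss-3B", "HeartCodec-oss"]


def hf_download_argv(repo_name, root="./HeartMuLa"):
    """Build the argv for one `hf download` call (hypothetical helper)."""
    return [
        "hf", "download", f"HeartMuLa/{repo_name}",
        "--local-dir", f"{root}/{repo_name}",
    ]


def all_commands(include_old=False):
    """Return every download command, optionally including the older 3B."""
    # HeartMuLaGen goes directly into the root directory, as in the article.
    commands = [["hf", "download", "HeartMuLa/HeartMuLaGen",
                 "--local-dir", "./HeartMuLa"]]
    names = REQUIRED + (OPTIONAL_OLD if include_old else [])
    commands += [hf_download_argv(name) for name in names]
    return commands


if __name__ == "__main__":
    for argv in all_commands(include_old=True):
        print(" ".join(argv))
        # To actually download, run from ComfyUI/models/ with:
        # import subprocess; subprocess.run(argv, check=True)
```

Run it from `ComfyUI/models/` so that the relative `./HeartMuLa` paths land in the same place as the manual commands.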

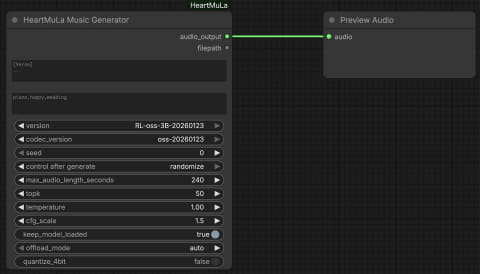

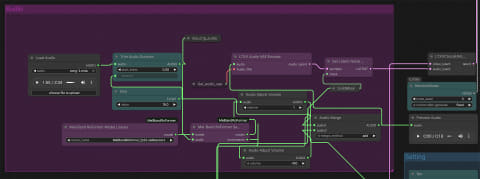

The ComfyUI workflow is simple: just connect HeartMuLa Music Generator to Preview Audio.

However, setting the length to 4 minutes does not produce a song of exactly that length. The song is generated according to the content of the lyrics, so it often ends up shorter. Conversely, with a value such as 60, the song often gets cut off partway through.

I quickly jotted down some lyrics, added style tags, and confirmed that it worked, but then a problem arises: who is going to write the lyrics?

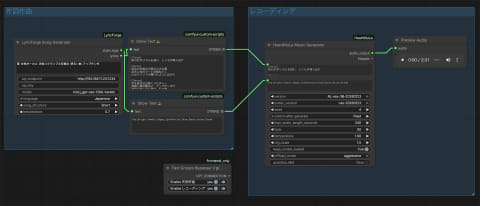

That’s when I came up with a custom node that automatically creates lyrics and style tags from keywords. Of course, it uses an LLM.

I analyzed the repository with Claude Code, created a system prompt that generates lyrics and style tags from keywords, packaged it as a custom node, and published it on GitHub.

The LLM is specified as an endpoint in OpenAI API format. In my case, I use LM Studio + gpt-oss-120b running on an M4 Max, but a smaller model such as the 20b is fine as long as it understands Japanese. Please configure it according to your environment.
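As a rough illustration of the idea (not the custom node's actual code), the sketch below sends keywords to an OpenAI-compatible chat endpoint and splits the reply into lyrics and style tags. The system prompt, the model name, the base URL (LM Studio's usual local default), and the assumption that the last line of the reply is the comma-separated tag list are all placeholders chosen for this example.

```python
import json
import urllib.request

# Hypothetical system prompt; the prompt used by the real custom node
# is not shown in the article.
SYSTEM_PROMPT = (
    "From the user's keywords, write song lyrics with section markers such as "
    "[Intro], [Verse], [Chorus] and [Outro]. Finish with one final line of "
    "comma-separated style tags."
)


def build_payload(keywords, model):
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": keywords},
        ],
    }


def split_lyrics_and_tags(text):
    """Assume the last non-empty line holds the tags; the rest is lyrics."""
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not lines:
        return "", []
    tags = [tag.strip() for tag in lines[-1].split(",") if tag.strip()]
    return "\n".join(lines[:-1]), tags


def generate(keywords, base_url="http://localhost:1234/v1", model="gpt-oss-20b"):
    """Call the endpoint (base_url and model are assumptions; adjust them)."""
    request = urllib.request.Request(
        base_url + "/chat/completions",
        data=json.dumps(build_payload(keywords, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return split_lyrics_and_tags(body["choices"][0]["message"]["content"])
```

Keeping the parsing separate from the HTTP call makes it easy to check the output format without a running LLM server.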

When I tried the keywords “snow, female vocals, Shibuya Scramble Crossing, bright song, up-tempo”, the following output was obtained.

[Intro]

The city lights beat out the rhythm, and the synth begins to play.

[Verse]

A little snow falling at the Shibuya crossing

The beat of light rises at my feet

My heart expands like a window opens

[Chorus]

A melody that you can reach if you reach out

Smiles and the wind will dance at an uptempo

The white constellations drawn in the night sky, my song

[Verse]

My heart pounds every time the traffic light turns green.

Even in the crowd of people, you’re the only one I can see

Snowflakes are rhythmic accents

[Chorus]

A story connected by light and sound

Run brightly, to the stars of Shibuya

Carve this moment forever

[Outro]

Let’s keep singing until the last chord disappears

pop, bright, woman, happy, synthesizer, drum, dance, asia, dream

With this, I can manage even if I can’t write lyrics (lol). Below is the music generated by HeartMuLa Music Generator (the video is just a still image plus the audio, like an MP3).

A song (2:31) generated by HeartMuLa Music Generator from the keywords “snow, female vocals, Shibuya Scramble Crossing, bright song, up-tempo” and the tags “pop, bright, woman, happy, synthesizer, drum, dance, asia, dream”. Approximately 99 seconds on a GeForce RTX 5090

What do you think? On a GeForce RTX 5090, 2 minutes and 31 seconds of music is generated in about 99 seconds. The female vocals are also quite good (from a musician’s point of view, it’s great because no pitch correction is needed! lol). I played in a band long ago and can compose from chord progressions, but I can’t write lyrics; even so, I can now do all of this locally.
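To put those two numbers in perspective, here is a small calculation of the generation speed relative to real time. It uses only the figures quoted above (a 2:31 song, about 99 seconds of generation time); the helper name is made up for this example.

```python
def to_seconds(mmss):
    """Convert an 'M:SS' string to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


song_seconds = to_seconds("2:31")   # 151 seconds of audio
generation_seconds = 99             # approximate time reported in the article
speed = song_seconds / generation_seconds

print(f"{song_seconds} s of audio in {generation_seconds} s "
      f"-> {speed:.2f}x real time")
```

In other words, on this GPU the song is generated roughly one and a half times faster than it takes to play.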

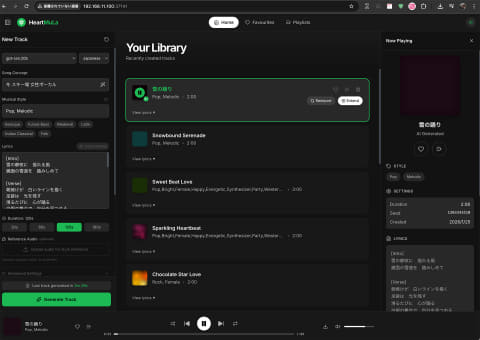

Use HeartMuLa-Studio, which looks just like Suno

While I was playing with the environment above, HeartMuLa-Studio, which has a UI that looks exactly like Suno, was published. As you can see from the screenshot, the UI really does resemble Suno! (lol).

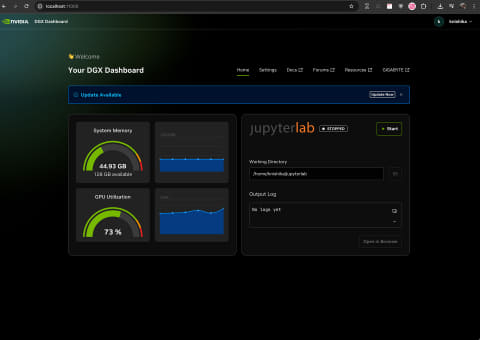

As for how to start it, there are two patterns: using Docker, or starting Python (the backend) and npm (the frontend) from the CLI. I confirmed operation with the former in a Windows environment and with the latter on a DGX Spark compatible machine.

I came across a couple of points that bothered me, which I have also raised as a question:

- HeartCodec always runs on the CPU, even on a single-GPU setup, which is slow

- Even when HeartMuLa-RL-oss-3B-20260123 is used, the codec is HeartCodec-oss; it needs to be matched to -20260123

For the first point, I want the codec to run on CUDA when there is enough VRAM. The part to change is here, around line 816:

else:
    # Without quantization, use lazy loading - codec stays on CPU
    pipeline = HeartMuLaGenPipeline.from_pretrained(
        model_path,
        device={
            "mula": torch.device("cuda"),
            "codec": torch.device("cpu"),  # change to "cuda"
        },
        dtype={

Then start the backend with 4-bit quantization and sequential offload both set to false.

HEARTMULA_4BIT=false HEARTMULA_SEQUENTIAL_OFFLOAD=false python -m uvicorn backend.app.main:app --host 0.0.0.0 --port 8000
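Rather than hard-coding `"cuda"` for the codec, the choice could be made conditional on free VRAM. The following is a minimal sketch of that idea, not code from HeartMuLa-Studio: the function names and the 4 GiB threshold are placeholders, and the only library call used is `torch.cuda.mem_get_info()`, which is skipped when PyTorch or CUDA is unavailable.

```python
def pick_devices(free_vram_gib, codec_needs_gib=4.0):
    """Choose a device name for each component.

    codec_needs_gib is a placeholder threshold, not a measured value.
    """
    codec = "cuda" if free_vram_gib >= codec_needs_gib else "cpu"
    return {"mula": "cuda", "codec": codec}


def free_vram_gib():
    """Return free VRAM in GiB, or 0.0 if PyTorch/CUDA is not available."""
    try:
        import torch
    except ImportError:
        return 0.0
    if not torch.cuda.is_available():
        return 0.0
    free_bytes, _total_bytes = torch.cuda.mem_get_info()
    return free_bytes / 2**30


if __name__ == "__main__":
    print(pick_devices(free_vram_gib()))
```

The returned names can then be wrapped in `torch.device(...)` where the pipeline expects device objects. Free VRAM should be measured after the main model is loaded for the check to be meaningful.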

The second point is in the same file, here, around line 33:

HeartMuLa-oss-3B and HeartMuLa-RL-oss-3B-20260123 are switched via MODEL_VERSIONS, but HF_HEARTCODEC_REPO = "HeartMuLa/HeartCodec-oss" is hard-coded. If you want to use -20260123, change this to "HeartMuLa/HeartCodec-oss-20260123".

That's about it. This is the situation at the time of writing, so it may well be fixed in the future. Line numbers are for reference only.

Before starting the app, you also need to start Ollama in server mode and pull an appropriate LLM.
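One way to avoid this kind of mismatch is to derive the codec repository from the selected model instead of fixing it in a constant. The sketch below shows the idea with a plain lookup table; the repository IDs come from the article, while `CODEC_FOR_MODEL` and `codec_repo_for` are hypothetical names and not part of HeartMuLa-Studio.

```python
# Pair each generation model with the codec released alongside it.
CODEC_FOR_MODEL = {
    "HeartMuLa/HeartMuLa-oss-3B": "HeartMuLa/HeartCodec-oss",
    "HeartMuLa/HeartMuLa-RL-oss-3B-20260123": "HeartMuLa/HeartCodec-oss-20260123",
}


def codec_repo_for(model_repo):
    """Return the codec repo matching a model repo, failing loudly if unknown."""
    try:
        return CODEC_FOR_MODEL[model_repo]
    except KeyError:
        raise ValueError(f"No codec registered for {model_repo!r}") from None


if __name__ == "__main__":
    print(codec_repo_for("HeartMuLa/HeartMuLa-RL-oss-3B-20260123"))
```

Raising on an unknown model is a deliberate choice here: silently falling back to the old codec is exactly the behavior described above.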

For now, it looks good, but it’s a bit of a hassle to start up, and what you can do isn’t much different from ComfyUI (the main addition is a list of the songs you’ve created?), so I personally recommend working with HeartMuLa in the ComfyUI environment.

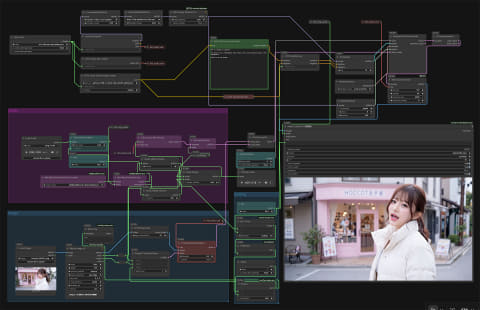

Create a 100% locally generated MV

Once you can make music, there’s only one thing to do! Produce a music video using LTX-2 S2V (lol).

- Create the music and note the start/end times of each section: first verse, second verse, chorus, etc.

- Example: A-melo (verse), B-melo (pre-chorus), chorus. To make a video for these, prepare 3 concept images, one per section. If continuity is required, such as the same face, use a face LoRA (as in this case) or generate the images with an editing model.

- Run S2V on the A-melo part only, the B-melo part only, and the chorus part only.

- At this point, check for misalignment by playing each clip together with the original audio (if it is misaligned, you will hear an echo)
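The first and third steps amount to cutting the song into per-section audio clips. The article does not say which tool was used for this, so the sketch below simply assumes ffmpeg is installed and builds one command per section; the section names and times are invented for illustration.

```python
def split_commands(song_path, sections):
    """Build one ffmpeg command per (name, start_sec, end_sec) section."""
    commands = []
    for name, start, end in sections:
        if end <= start:
            raise ValueError(f"Section {name!r} must end after it starts")
        commands.append([
            "ffmpeg",
            "-ss", f"{start:.2f}",        # seek to the section start
            "-i", song_path,
            "-t", f"{end - start:.2f}",   # keep only the section's duration
            f"{name}.wav",
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical timings; read the real ones off your own song.
    sections = [
        ("a_melo", 12.0, 40.5),
        ("b_melo", 40.5, 55.0),
        ("chorus", 55.0, 82.0),
    ]
    for argv in split_commands("song.mp3", sections):
        print(" ".join(argv))
```

Writing the times down once in a list like this also helps later, when the clips have to be lined up against the original audio.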

That’s the process. First, as before, here is just the music created with ComfyUI + ComfyUI-LyricForge + HeartMuLa Music Generator, in full (the video is a still image).

Full song created with ComfyUI + ComfyUI-LyricForge + HeartMuLa Music Generator (the video is a still image). It’s a little early, but it’s a chocolate song (lol). 2:33

Below are the concept images prepared for each part. There are 6 parts this time, hence 6 images, but the first and last are the same, so there are actually 5 in total. I didn’t make all 5 at once; I made them one by one, checking the mood as I went.

The LTX-2 S2V workflow is as follows. I have zipped the workflow JSON and posted it here, so please use it as a reference.

The key points for the LTX-2 part are:

- Use the base model + distilled LoRA (0.6) rather than the distilled model

- Normally, generation runs 8 steps at half the specified resolution and then 3 steps at x2, in other words it upscales back to the original resolution; here, the 8 steps are run directly at the specified resolution

Those are the two points. On the first, the distilled model feels somewhat too strong, so I weaken it by using the base model + distilled LoRA (0.6).

On the second, the normal method seemed to degrade the resolution, so I simply generated at the specified resolution.
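The difference between the two approaches is easier to see written out as data. The sketch below only describes the stages as the text explains them (8 steps at half resolution followed by 3 steps at x2, versus 8 steps at the target resolution); it does not call LTX-2, and the 1280x720 target is an assumption based on the article's mention of HD output.

```python
def two_stage_plan(width, height):
    """Usual approach: half-resolution pass, then a x2 upscale pass."""
    return [
        {"width": width // 2, "height": height // 2, "steps": 8},
        {"width": width, "height": height, "steps": 3},
    ]


def single_stage_plan(width, height):
    """Approach used here: all 8 steps at the specified resolution."""
    return [{"width": width, "height": height, "steps": 8}]


if __name__ == "__main__":
    for label, plan in [
        ("two-stage", two_stage_plan(1280, 720)),
        ("single-stage", single_stage_plan(1280, 720)),
    ]:
        print(label, plan)
```

The single-stage plan does more work per step, since every step runs at four times the pixel count of the half-resolution pass, which is the price paid for avoiding the upscale.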

Most of it is the same as LTX-2 I2V, but the audio section with a red background in the upper left corner is specific to S2V. It uses a model called Mel-Band RoFormer to separate the vocals from the instruments. Looking at the Mel-Band RoFormer Sampler, you can see that it has two outputs: vocals and instruments.

Each output goes through an Audio Adjust Volume node, set to 1 for vocals and -100 for instruments.

However, depending on the concept image and the song, the rhythm may not come across unless the instruments are mixed in lightly; for example, the drumsticks may not match the beat (in this case I set it to -3 during the interlude). So the setting sometimes has to be adjusted by comparing the music against the output video.
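If the Audio Adjust Volume values are decibels (an assumption; the article does not state the unit), the three settings translate into linear gain as follows. The conversion itself is the standard amplitude formula, gain = 10^(dB/20).

```python
def db_to_gain(db):
    """Convert a decibel offset to a linear amplitude multiplier."""
    return 10 ** (db / 20)


if __name__ == "__main__":
    settings = [
        ("vocals", 1),
        ("instruments", -100),
        ("instruments (interlude)", -3),
    ]
    for label, db in settings:
        print(f"{label}: {db:+d} dB -> x{db_to_gain(db):.5f}")
```

Under that assumption, -100 is effectively silence, while -3 leaves the instruments at roughly 70% amplitude, enough for the beat to come through.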

The only app I have on hand is iMovie, so I use it to edit the 6 videos. It’s mainly concatenation, but since the audio inevitably drifts, it becomes a tedious job: make each part a little longer (leave some margin), lay the original audio underneath, play it back, and adjust the joins… (any offset produces echoes and the like). If the clips could be joined without adjustment, the work time would drop considerably…

After eliminating the offsets, delete the audio on the video side, use the original audio as is, and export as MP4. The result is HD at this point, so I use an app (Unsqueeze https://apps.apple.com/jp/app/unsqueeze/id6475134617) to upscale it to full HD.

Songwriting, vocals/instruments, concept images, lip-sync video… a 100% locally generated MV

There are still a few parts that bother me, but between regenerating videos while changing concept images and prompts, fixing the joins, and so on, it took 8 hours to get this far (lol). The AI generates each piece in about 2 minutes, but it is by no means an easy task.

There are some disappointing parts, such as broken outlines and fingers, because it was generated in HD resolution to begin with, but I think you can see how enjoyable it is to do all of this 100% locally on your own PC.

As described above, with the advent of HeartMuLa, it is now possible to produce music videos 100% locally, without using Suno at all.

And mind you, this is the state of things as of January 2026. What will it be like by the end of this year? I have no idea. People who enjoy this kind of production will find their time melting away (lol).

![[Kazuhisa Nishikawa’s irregular column] Can you recreate that Suno on your local PC? I want you to listen to the songs created with “HeartMuLa” – PC Watch](https://asset.watch.impress.co.jp/img/pcw/docs/2081/382/C00_l.jpg)